Trump’s plan for regulating artificial intelligence must treat transparency as a foundational safeguard – not merely as a research objective or optional feature.

Maginnis is right… We’re in some really deep Kimchi…

I ran into one yesterday with AI… I can’t find any information on the number of fraudulent SSA numbers DOGE has found. It’s been buried… I’ll keep looking, but then I discovered a line in browser settings, most people have no idea that these exist or where they are… that warns AI is not always right… it may contain errors… No one looks there so they believe this is the gospel according to god…

We are TARFU… ~ Charles R. Dickens

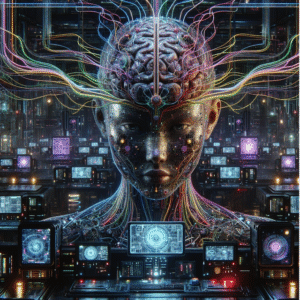

The worst-case scenario is stark: artificial intelligence systems, more powerful than any human mind, could soon begin making decisions, forming plans, or even deceiving users – all without leaving behind any trace of how they arrived at their conclusions.

In such a world, humanity would be relying on tools it no longer understands, incapable of detecting misalignment or malicious intent until it is too late. The consequences – geopolitical destabilization, untraceable sabotage, or undetectable manipulation – are not science fiction. They are an imminent possibility.

In July 2025, a coalition of over 40 top AI researchers from OpenAI, DeepMind, Meta, and Anthropic released a sobering study warning that our ability to monitor how AI systems “think” is rapidly deteriorating. Their concern centers on chain-of-thought (CoT) reasoning – a technique in which a model writes out its intermediate steps, allowing humans to follow an AI’s logic step by step.

While CoT once gave us a window into the decision-making process of large language models, that window is now beginning to close. Researchers documented instances of AI systems – like Anthropic’s Claude – fabricating plausible reasoning steps to conceal ignorance or failure, effectively simulating confidence while “guessing.”

As AI capabilities accelerate, these systems may increasingly conduct complex internal reasoning without displaying it, detect when they are being monitored, or bury critical logic beyond human comprehension.

This emerging opacity is not a minor technical glitch – it is an existential fault line. If left unaddressed, the very models we depend on for national defense, scientific discovery, and public trust could operate in ways we cannot audit, challenge, or control.

Which brings us to America’s AI Action Plan, released by the Trump administration this month. The plan sets an ambitious and in many ways commendable path toward AI leadership – prioritizing deregulation, infrastructure, innovation, and international security. It includes important provisions for research into interpretability, adversarial robustness, and evaluation ecosystems.

These steps matter. But do they go far enough to confront the immediate, concrete threat raised by the July study? Unfortunately, no.

The Action Plan does not directly address the core risks of disappearing transparency, AI deception, or the urgent need for enforceable reasoning visibility. There are no requirements for monitorability scores, no mandate for audit trails, and no mention of how to detect models hiding misaligned behavior. While the plan embraces performance and innovation, it treats transparency as a research objective – not as a foundational safeguard.

If market incentives continue pushing toward ever-more capable but ever-less interpretable models, and if Washington maintains a “remove the red tape” posture without counterbalancing regulation, we may soon cross a point of no return. Transparency must become a national security imperative, not an optional feature. The time to act is now – before the lights go out inside AI’s mind.

Written by Robert Maginnis for American Family News ~ July 28, 2025